Enterprises across all major industries adopt Apache Hadoop for its ability to store and process an abundance of new types of data in a modern data architecture. This “Any Data” capability has always been a hallmark feature of Hadoop. It unlocks insight from new data sources such as clickstream, web and social, geo-location, IoT, and server logs, as well as from traditional data sets in ERP, CRM, SCM, and other existing data systems.

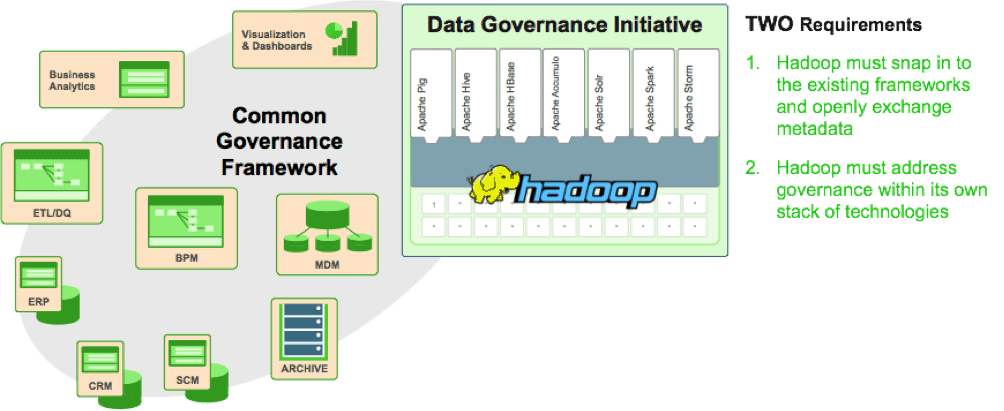

But this also means that enterprises adopting a modern data architecture with Hadoop must confront data management realities when they bring existing and new data from disparate platforms under management. As customers deploy Hadoop into corporate data and processing environments, metadata management and data governance become vital parts of any enterprise-ready data lake.

For these reasons, we established the Data Governance Initiative (DGI) with Aetna, Merck, Target, and SAS to introduce a common approach to Hadoop data governance into the open source community. Since then, this co-development effort has grown to include Schlumberger. Together we work on this shared framework to shed light on how users access data within Hadoop while interoperating with and extending existing third-party data governance and management tools.

A New Project Proposed to the Apache Software Foundation: Apache Atlas

I am proud to announce that engineers from Aetna, Hortonworks, Merck, SAS, Schlumberger, Target and others have submitted a proposal for a new project called Apache Atlas to the Apache Software Foundation. The founding members of the project include all the members of the DGI and others from the Hadoop community.

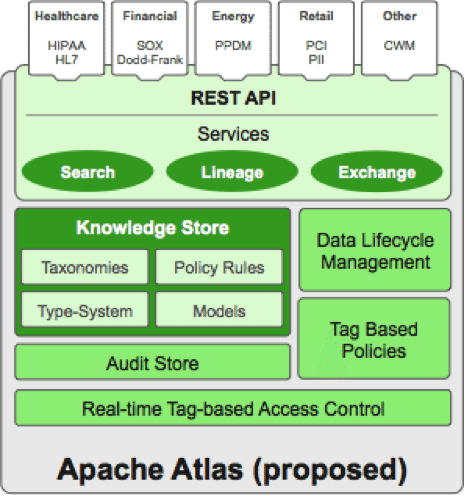

Apache Atlas proposes to provide governance capabilities in Hadoop that use both prescriptive and forensic models, enriched by business taxonomical metadata. At its core, Atlas is designed to exchange metadata with other tools and processes within and outside of the Hadoop stack. This enables platform-agnostic governance controls that effectively address compliance requirements.

The core capabilities defined by the project include the following:

- Data Classification – to create an understanding of the data within Hadoop and provide a classification of this data to external and internal sources

- Centralized Auditing – to provide a framework for capturing and reporting on access to and modifications of data within Hadoop

- Search and Lineage – to allow pre-defined and ad-hoc exploration of data and metadata while maintaining a history of how a data source or a specific data set was constructed

- Security and Policy Engine – to protect data and rationalize data access according to compliance policy.
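The Search and Lineage capability essentially means keeping a graph of which datasets and processes produced which outputs, so that the history of any data set can be traced back to its sources. The following is a minimal, hypothetical Python sketch of that idea only. The `LineageGraph` class, its methods, and the dataset and job names are assumptions made for illustration. They are not part of the Apache Atlas proposal or its API.

```python
from collections import defaultdict


class LineageGraph:
    """Hypothetical minimal lineage store (illustration only, not the Atlas API).

    Each dataset is linked to the datasets it was derived from and to the
    process that produced it, so its history can be reconstructed later.
    """

    def __init__(self):
        self._parents = defaultdict(set)  # dataset -> direct upstream datasets
        self._producer = {}               # dataset -> process that last wrote it

    def record(self, output, inputs, process):
        """Record that `process` read `inputs` and wrote `output`."""
        self._parents[output].update(inputs)
        self._producer[output] = process

    def produced_by(self, dataset):
        """Return the process that last wrote `dataset`, or None for source data."""
        return self._producer.get(dataset)

    def upstream(self, dataset):
        """Return every dataset that `dataset` was transitively derived from."""
        seen, stack = set(), [dataset]
        while stack:
            # .get() avoids inserting empty entries into the defaultdict
            for parent in self._parents.get(stack.pop(), ()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen


# Usage: a clickstream feed is cleaned, then joined with CRM data.
g = LineageGraph()
g.record("clean_clicks", {"raw_clickstream"}, "etl_clean")
g.record("customer_360", {"clean_clicks", "crm_accounts"}, "join_job")
print(sorted(g.upstream("customer_360")))
# ['clean_clicks', 'crm_accounts', 'raw_clickstream']
```

A production governance store would likely also persist, version, and search these entities; this sketch shows only the graph traversal that answers "where did this data come from?"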

The Atlas community plans to deliver these capabilities with the following components:

- Flexible Knowledge Store,

- Advanced Policy Rules Engine,

- Agile Auditing,

- Support for specific data lifecycle management workflows built on the Apache Falcon framework, and

- Integration and extension of Apache Ranger to add real-time, attribute-based access control to Ranger’s already strong role-based access control capabilities.
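A small sketch can illustrate how attribute-based access control layers on top of role-based access control. Everything in it is hypothetical: the `Request` type, the `ROLE_GRANTS` table, and the rule that PII-tagged data requires a `pii_training` user attribute are invented for illustration and do not reflect the actual policy model of Apache Ranger or Atlas. The point is only that the attribute check is evaluated per request, using properties of the user and of the data (such as a classification tag), in addition to the role check.

```python
from dataclasses import dataclass

# Hypothetical role-based grants: role -> actions it may perform.
ROLE_GRANTS = {"analyst": {"read"}, "admin": {"read", "write"}}


@dataclass
class Request:
    user_roles: set     # roles held by the requesting user
    user_attrs: dict    # user attributes, e.g. {"pii_training": True}
    resource_tags: set  # classification tags on the data, e.g. {"PII"}


def rbac_allows(req, action):
    """Role-based check: does any of the user's roles grant this action?"""
    return any(action in ROLE_GRANTS.get(role, set()) for role in req.user_roles)


def abac_allows(req, action):
    """Attribute-based check evaluated at request time (hypothetical rule):
    data tagged PII may only be accessed by users with pii_training=True.
    `action` is unused here but kept so both checks share one signature."""
    if "PII" in req.resource_tags:
        return bool(req.user_attrs.get("pii_training", False))
    return True


def is_allowed(req, action):
    # Attribute-based rules refine, rather than replace, the role-based grant.
    return rbac_allows(req, action) and abac_allows(req, action)


untrained = Request({"analyst"}, {"pii_training": False}, {"PII"})
trained = Request({"analyst"}, {"pii_training": True}, {"PII"})
print(is_allowed(untrained, "read"))  # False: role allows it, attribute rule does not
print(is_allowed(trained, "read"))    # True
print(is_allowed(trained, "write"))   # False: analysts have no write grant
```

Keeping the two checks separate mirrors the idea of extending, rather than replacing, an existing role-based model.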

Why Atlas?

Atlas targets a scalable and extensible set of core foundational governance services, enabling enterprises to meet their compliance requirements effectively and efficiently within Hadoop while integrating with the broader data ecosystem. Apache Atlas is organized around two guiding principles:

- Metadata Truth in Hadoop: Atlas should provide true visibility into Hadoop. By using both prescriptive and forensic models, Atlas provides technical and operational audit as well as lineage enriched by business taxonomical metadata. Atlas makes metadata exchange easy by letting any metadata consumer share a common metadata store, enabling interoperability across many metadata producers.

- Developed in the Open: Engineers from Aetna, Merck, SAS, Schlumberger, and Target are working together to help ensure Atlas is built to solve real data governance problems across a wide range of industries that use Hadoop. This approach is an example of open source community innovation that helps accelerate product maturity and time-to-value for the data-first enterprise.

Stay Tuned for More to Come

The proposal of Apache Atlas represents a significant step on the journey to addressing data governance needs for Hadoop completely in the open.

Seetharam Venkatesh and I are talking about the Data Governance Initiative and the Apache Atlas project proposal at Hadoop Summit Europe in Brussels.

After the event, we will be back with more on the exciting progress of Apache Atlas!

The post Apache Atlas Project Proposed for Hadoop Governance appeared first on Hortonworks.